I am a pragmatic person at heart, and perhaps because of that I have a hard time seeing the value of debating so much about terminology. We've seen so much about that when talking about Software Defined Networking (SDN). What SDN is and is not, etc.

Now we are seeing a lot of debate about what white box networking is and what it is not. And new terms arise as well: brite box, white brand, … I am sure I am missing some.

There is an irony in all of this in that white boxes aren't white. I invite the reader to check out the models from Accton, Quanta, Penguin, … they are all black!

A black whitebox switch

Leaving the joke aside, I do understand that semantics matter. But at the same time I think we waste time discussing so much about terminology. I think people end up being confused, and sometimes I wonder if certain companies promote that confusion.

What is the point about white-whatever network devices?

I think (and I believe most readers would agree) it's about disaggregation. Disaggregation is about having choice: the choice of running any network OS on any network hardware. Like you do with servers, where you can buy a server from Cisco and run an OS from Red Hat, or Microsoft, etc. The expectation is that with disaggregation will come cost savings. That last part is not a given, of course, and it is where the "white" part comes in. For if you can run your Network OS of choice on a cheap "whitebox" piece of hardware, you should be able to save money. But the point is really about being able to run any Network OS on any hardware (ideally). This all assumes, of course, that all hardware is equal and of minimal value, so you can use the cheapest hardware, because the value is in the software alone. I personally disagree with this reasoning.

Then of course, some people like to (over)simplify things, and they make it all about the boot loader. If you do ONIE, you are cool. ONIE alone means you are disaggregated, open, whitebox, and cool. If you don't do ONIE, then you are not open, you are not whitebox … whatever. To me this is like being back at school, when you always wanted to play whatever game the cool, popular boy you admired proposed.

It is almost impossible to imagine that the networking industry is different from the server industry simply because we did not have an open boot loader, and that it will change now that ONIE is around.

In the end the objective seems to be that you are able to run any network OS on any network hardware. That is disaggregation. Mind you, I am not saying that disaggregation is good or bad. I am simply stating what the "new" thing with disaggregation is.

Whitebox or not? … well … imagine that you run Open Network Linux (ONL) on an Arista branded box. What do we call that? … I am sure there's a name for it. Someone will come up with one. But I think it does not really matter (it is also not possible anyway, at least not for now, and if it were possible, I hardly see any value in it).

Now that we agree that the point is about disaggregation (and we still have not debated about whether that is good or not, or to what extent it is happening), let's also talk about what is not the point of the "whitebox" discussion. SDN is not the point.

I don't want to get into a heated semantic debate of what SDN is and is not. However, the goal of SDN was to simplify and/or change the way we do networking. It was about getting rid of all that box by box configuration of discrete network units running legacy network control protocols. Wasn't it? … I think we will all agree that if I run ONL on a Quanta box and I build my network using spanning tree, I can't say I am doing SDN. It is a different thing of course if I use ONL on a Quanta box with an ODL controller to build L2 segments. So it's clear to me that we are talking of two different things: SDN is not about disaggregation, and disaggregation is not about SDN.

Is disaggregation in the network industry happening?

It is, and it always has been. I remember attending Supercomm in Atlanta back in 2000 and talking to a Taiwanese company that offered "whitelabel" (yet another term!) switches. I could have started my own networking company and produced NilloNet branded switches. "What OS can I run on my NilloNet switches then?" I asked the kind sales person at the booth … "Well, we provide you with one that we could even customise with your brand, or you can bring your own". Impressive. A booming industry we had, back in the year 2000.

But anecdotes aside, clearly what is happening today is somewhat different. ODM vendors have been there for a long time. Merchant silicon is not new either. What was missing was a decent network operating system for running on ODM-provided hardware. There were Network OSes you could use. I experimented with XorPlus a while back (maybe in 2010?) … But I was … well … less than impressed. These days there are more options to do traditional networking on ODM-provided hardware. Better options too.

The server industry and the networking industry are very different

If we look at the server industry, any server easily has a dozen different OS options on the list of supported software, if not more. This has been the case for ages now. And yet, this has not translated into ODM-provided servers becoming dominant. Quite the contrary. People primarily buy branded servers from HP, Cisco, Dell … And for good reasons. For quality, logistics and primarily operational reasons, customers see greater value in certain brands. The promise that running low-cost whitebox hardware saves you money has not yet proved true for Enterprise customers.

Of course, on the networking side of the house, things are still different: you cannot choose your network OS with your hardware of choice (and for good reasons IMHO).

But … this will change, some say. This is already changing, others are saying … Promoters of the disaggregated model are quick to point out the Dell S6000 (the ONIE model supports only two options, Cumulus Linux and Big Switch Light OS), the Juniper OCX1100 (but it only supports JunOS), or the recently announced HP support for Cumulus Linux. Actually, no HP switch supports Cumulus Linux today (not that I know of, at least; the educated reader will be so kind as to correct me). Instead, HP resells one of the Accton models that are on the Cumulus Hardware Compatibility List (HCL). So if you buy that switch model through HP, you get an HP supported open platform that can run … Cumulus Linux (no, apparently, it cannot run HP's Comware … only Cumulus Linux).

This is what is happening. So much for choice …

I am sure the skeptical reader will be quick to point out that "these are early days". "Wait and see in one year". Sure, they are early days.

Will we get to the point where we see a switch from a vendor (any vendor, white or coloured) running any Network OS? Just imagine the ordering web page of a hardware company … a drop down menu where we choose the operating system from a list with NX-OS, JunOS, EOS, Cumulus Linux, FTOS, … Well, I am very skeptical that we will ever see that day. And I don't think customers are generally looking for that either.

Why network disaggregation is not like server disaggregation

It is always nice to use parallelisms to draw analogies in order to explain something. But parallelisms can be deceptive too. We have seen this before with those that were comparing the server virtualisation industry with "rising" network virtualisation: a great marketing message, a clear failure (look back to 2011, and look at us today ...).

I believe we may be in the same situation whenever we talk of switch disaggregation and compare it with server disaggregation. We are comparing similar processes in two industries that are related, but not quite the same.

I think it is very different for three reasons.

First, volume. The server industry is much, much larger than the networking industry. This is very evident, but to illustrate it in any case, the reader can think that in every standard rack (if you have proper cooling) you can fit up to 40 1RU servers and two 1RU ToR switches. It is very clear that the number of physical servers in any datacenter outnumbers the number of network switch devices by an order of magnitude or more. The larger volume creates a different dynamic for vendors to deal with margins, R&D, cost of integration and validation of software, etc.
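As a back-of-the-envelope check of the rack arithmetic above, here is a minimal sketch using only the figures from the text (40 1RU servers and two 1RU ToR switches per rack). The function name is my own, and the calculation deliberately ignores spine or aggregation switches, so treat it as an illustration and not as market data.

```python
def servers_per_switch(servers_per_rack: int = 40, tor_switches_per_rack: int = 2) -> float:
    """Ratio of servers to ToR switches in a single rack.

    Defaults are the per-rack figures quoted in the text; anything
    beyond the rack (spines, aggregation) is intentionally ignored.
    """
    return servers_per_rack / tor_switches_per_rack


ratio = servers_per_switch()
print(f"{ratio:.0f} servers per ToR switch")
```

With the figures above, that is 20 servers for every ToR switch in a fully populated rack.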

Second reason, servers are general purpose. A server runs a general purpose CPU to run a general purpose operating system to run many different applications. This means that there is both a need for, and an interest in, developing multiple operating systems (on a market that has a large volume). A market that is large enough for many large and small players to be profitable on the software and on the hardware side. Networking devices, on the other hand, have as their main purpose (their sole purpose perhaps) to move packets securely and efficiently, with minimal failures. This requires specialised hardware. That leads to our next point …

Third reason, lack of an industry standard instruction set for networking hardware. In the server industry, the x86 architecture prevails. It does not take a lot of effort to ensure you can run RHEL, Windows, ESXi, Hyper-V, etc. on an HP server or on a Cisco server because both servers use the same processors. Granted, you need to develop drivers for specific vendor functions or hardware (NICs for instance, power management, etc.) but the processor instruction set is always the same.

In the networking industry the same is not true, at all. Back in 2011, some people thought that OpenFlow was going to be "the x86 of networking". I for one was certain that would not be the case. I think we can all agree today that indeed it isn't the case. But why, and what does it mean for the network vendors? … As for the why, leaving aside the limitations of OpenFlow itself, there is little to no interest among the merchant silicon vendors in agreeing on a common architecture.

Broadcom, Intel, Marvell, Cavium … they all have their own hardware architectures and their own SDKs, and they try to differentiate their offerings by keeping it that way. In some cases (in most cases), there is even more than one SDK and hardware family within a single vendor offering.

For a Network OS vendor this means that you need to develop for multiple ASICs, which translates into greater development efforts, and inconsistent feature sets. Take a look at Cumulus Linux for instance. Switchd today works on a Broadcom chip family, but does not work yet on other Broadcom chip families, or on Intel, Cavium, etc.

At this point some readers may be thinking "well, but ultimately, everybody uses Broadcom Trident chips, that's all that is needed anyway. So Broadcom will provide the de facto standard". Not really. Vendors seek differentiation, and will strive to find it. And contrary to the common mantra of the day, differentiation and value do not come exclusively from software. Hardware is needed. Hardware is not merely a necessary evil, as some think of it. It is part of the solution to bring value.

As soon as all vendors have table stakes in hardware (by using the same chips), those vendors will seek to add value to avoid a race to the bottom. Those with the capability to try and create additional value by developing better hardware will inevitably do it. Those without it, will seek to use a different merchant vendor offering.

Why else does JNPR work to bring up a new line of switches using their own silicon? … Brocade also developed their own ASICs on certain switches. And if you look at Arista, they currently have products using Intel chips to provide a differentiated product line, and there are rumours that they are working on adding Cavium to the list. In a way, in the end, all these vendors are following the path set by Cisco, combining merchant offerings with better silicon (in the case of Cisco, its own silicon).

The challenge then, from a disaggregation point of view, is that you need to develop network operating systems that work with very dissimilar hardware platforms underneath. This is a substantial development effort (along with its associated ongoing support). Add to that the fact that networking hardware tends to have a much longer lifetime than server hardware, which makes it even more complicated.
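To make that development effort a bit more concrete, here is a minimal sketch of the kind of hardware abstraction a Network OS vendor ends up maintaining. Everything in it is hypothetical: the class names, the `add_route` method and the feature lists are mine for illustration and do not correspond to any real vendor SDK or product.

```python
from abc import ABC, abstractmethod


class AsicBackend(ABC):
    """Hypothetical interface a network OS implements once per ASIC SDK."""

    # Features this backend can program into hardware (illustrative names).
    supported_features: frozenset = frozenset()

    @abstractmethod
    def add_route(self, prefix: str, next_hop: str) -> None:
        """Program one route into the chip's forwarding table."""


class VendorAChip(AsicBackend):
    supported_features = frozenset({"l2", "l3", "vxlan"})

    def __init__(self) -> None:
        self.routes: dict = {}

    def add_route(self, prefix: str, next_hop: str) -> None:
        # A real backend would call vendor A's SDK here.
        self.routes[prefix] = next_hop


class VendorBChip(AsicBackend):
    supported_features = frozenset({"l2", "l3"})

    def __init__(self) -> None:
        self.routes: dict = {}

    def add_route(self, prefix: str, next_hop: str) -> None:
        # A different SDK, with different calls and different limits.
        self.routes[prefix] = next_hop


def common_features(backends) -> frozenset:
    """Features the OS can offer consistently on every supported chip."""
    sets = [b.supported_features for b in backends]
    return frozenset.intersection(*sets) if sets else frozenset()


print(sorted(common_features([VendorAChip(), VendorBChip()])))
```

Each new chip family means another backend to write, validate and support, and the consistent feature set shrinks to whatever every backend has in common.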

[As an anecdote to illustrate an extreme case of this point, last year I dealt with a customer who was running a non-negligible part of their datacenter on good old … Catalyst 5500s!! … This equipment has been in service since the late 90s … How about that?

(An interesting side thought about that: if this customer had chosen ANY of the competitive offerings to the Catalyst 5500 back in the day, they'd be running equipment from companies that no longer exist. Although in all probability, if that had been the case, they would not have been able to run that hardware for so long at all)]

Net net, when you consider that (a) the networking market is an order of magnitude smaller than the server market, (b) the number of players developing for it is also smaller and (c) there is no standard for network hardware chips, I think it is very unlikely that we will get to see a disaggregation like the one we've seen in the server industry.

Aren't new software players changing that?

Some may have read about IP Infusion launching its own networking operating system. I don't think this changes anything I've written so far. I look at this like I look at Arista developing EOS to run on multiple hardware merchant vendors (Intel, Broadcom ...). It is definitely possible, and it requires significant development investment with additional support cost.

Ultimately, the point is whether they will be able to deliver more value than a vendor that integrates their offering with their hardware (potentially better hardware).

I see this move from IP Infusion more as a response to them being disrupted than as part of the disruption. I may be wrong of course, but IP Infusion builds their business on selling protocol stacks to companies that can't develop them by themselves, or choose not to do it for whatever reason. Cisco, JNPR, ALU may have their own routing stacks (BGP, OSPF, ...), MPLS stacks, etc. Others rely on companies like IP Infusion for "acquiring" a protocol stack that they can't develop. If that "others" part of the market is now competing with the likes of Cumulus, those parts of the protocol stack are being filled in by open source projects like Quagga and so on. So I think this is a response to try and stay competitive in that part of the market.

But disaggregation IS happening

So many people say it, it has to be true! ... Again, yes it is. It always was. It always had a part of the market. A very small part of the market. Now that part of the market may be larger because cloud providers in particular may be interested in that model and its complications. I keep writing "may" because this is not a given. To explain why, I'd like to also separate another concept here. Just like SDN is not disaggregation, and disaggregation is not SDN, disaggregation is not Linux.

Many who think of Cumulus Networks think of white boxes. Therefore they think customers who buy into Cumulus Linux do it to buy cheaper hardware from white box vendors. But Cumulus does not sell white boxes. They sell software subscriptions. White boxes are a vehicle, a route to market (a necessary one for Cumulus). The value proposition for Cumulus is not really so much about disaggregation, I think. I believe that disaggregation is a great eye catcher to generate attention, to open the door, to create debate and confront established players. The value proposition is about providing a credible networking offering using Linux. Not an operating system that uses Linux, but Linux networking. It is about managing your network devices like you manage your servers. Now that is a much more interesting thought. One that is not for everybody, at least not at the moment.

Conclusion

All the above is just a brain dump. Nothing more. Food for thought. I believe that disaggregation of the networking industry is a big hype at the moment. This hype, and the semantics battle around it, are awesome for analysts, bloggers and to some extent investors and vendors in order to create debate, and offer their products along the way (yes, they all offer their products along the way …).

Ultimately, competition is great. New players, ODM vendors with new OS vendors, are all welcome. The more competition, the better for customers, the better for the industry, the better for everybody.

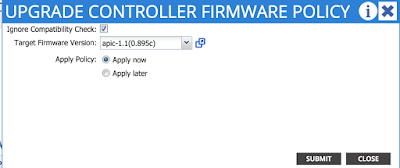

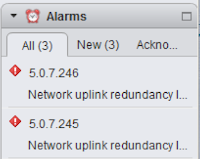

Of course when the leaf switches reboot, the end hosts will see a link down, so in order to avoid service interruptions they must be dual homed (one port to a switch with an even ID, one to a switch with an odd ID - hence our upgrade policy). In our case, the hosts are running ESXi, we see the link down flagged as an alarm:
